😀😀😀

Our work has been selected for the SIGGRAPH 2024 Technical Papers Trailer!

😀😀😀

The Trailer

Deep Fourier-based Arbitrary-scale Super-resolution for Real-time Rendering

Haonan Zhang*¹, Jie Guo*¹, Jiawei Zhang¹, Haoyu Qin¹, Zesen Feng¹, Ming Yang¹, Yanwen Guo†¹

¹State Key Lab for Novel Software Technology, Nanjing University

*Joint first authors

†Corresponding author

Abstract

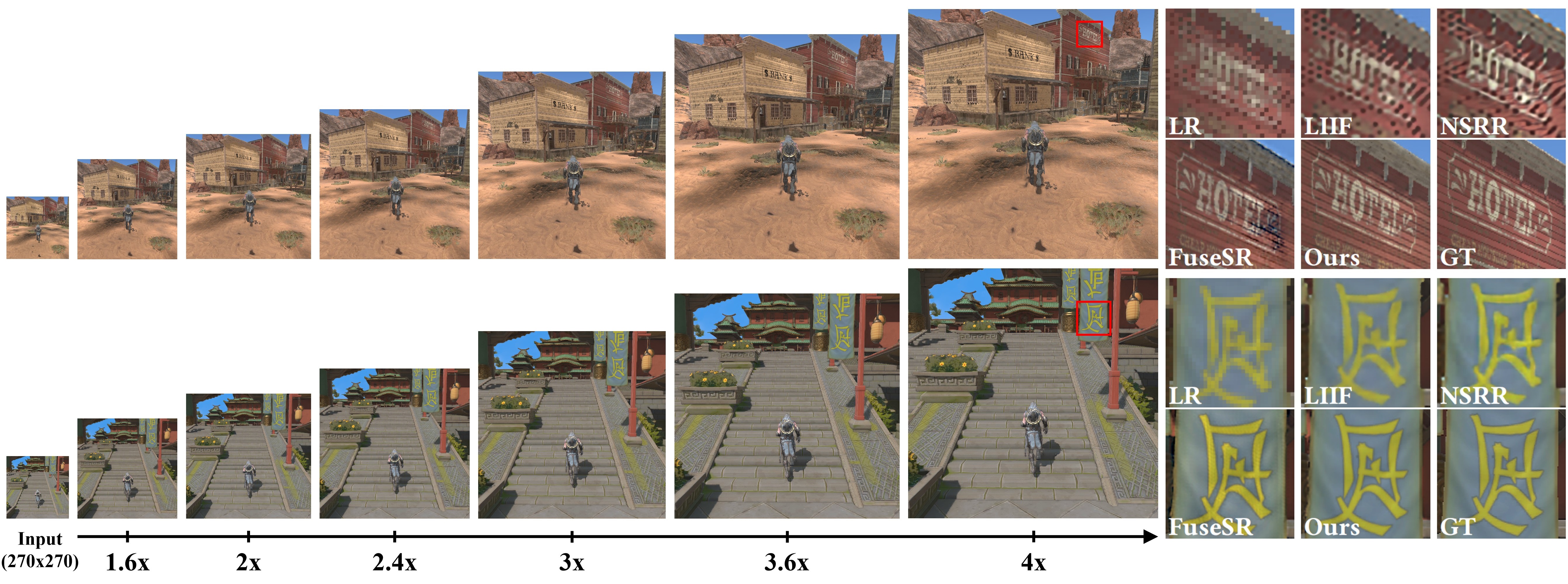

As a prevailing tool for effectively reducing rendering costs in many graphics applications, frame super-resolution has made significant progress in recent years. However, most prior works designed for rendered content share a common limitation: once a model is trained, it supports only a single, fixed upscaling factor. In this paper, we aim to eliminate this limitation by enabling arbitrary-scale super-resolution with a single trained neural model. The key is a Fourier-based implicit neural representation that maps arbitrary coordinates in the high-resolution spatial domain to valid pixel values. Observing that high-resolution G-buffers have spectra similar to those of high-resolution rendered frames, we design a High-Frequency Fourier Mapping (HFFM) module that recovers fine details from low-resolution inputs without introducing noticeable artifacts. A Low-Frequency Residual Learning (LFRL) strategy preserves low-frequency structures and keeps the bias introduced by network inference low. Moreover, our spatial-temporal masks, derived from G-buffers and motion vectors, cleanly separate different rendered contents. Several lightweight network designs ensure real-time performance across a wide range of scenes.
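The abstract does not spell out how one model can be queried at any scale. As a rough illustration only, the NumPy sketch below shows one generic way to do it: each high-resolution pixel center is mapped to a continuous low-resolution coordinate, a bilinear upsample supplies a low-frequency base (in the spirit of residual learning), and sin/cos features of each query's sub-texel offset are handed to a residual predictor. This is not the authors' HFFM/LFRL implementation. The function names (`hr_to_lr_coords`, `bilinear_sample`, `fourier_features`, `upscale`), the bilinear base, the default frequency set, and the `residual_fn` hook standing in for the trained network are all hypothetical assumptions.

```python
import numpy as np

def hr_to_lr_coords(h_hr, w_hr, h_lr, w_lr):
    """Map HR pixel centers to continuous LR pixel coordinates (pixel-center convention)."""
    sy, sx = h_hr / h_lr, w_hr / w_lr
    ys = (np.arange(h_hr) + 0.5) / sy - 0.5
    xs = (np.arange(w_hr) + 0.5) / sx - 0.5
    return np.meshgrid(ys, xs, indexing="ij")  # two arrays of shape (h_hr, w_hr)

def bilinear_sample(img, ys, xs):
    """Sample an (H, W, C) image at continuous coordinates, clamping at the borders."""
    h, w = img.shape[:2]
    ys = np.clip(ys, 0, h - 1)
    xs = np.clip(xs, 0, w - 1)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    wy = (ys - y0)[..., None]
    wx = (xs - x0)[..., None]
    top = img[y0, x0] * (1 - wx) + img[y0, x1] * wx
    bot = img[y1, x0] * (1 - wx) + img[y1, x1] * wx
    return top * (1 - wy) + bot * wy

def fourier_features(ys, xs, freqs):
    """Encode each query's offset from its nearest LR texel center as sin/cos at several frequencies."""
    freqs = np.asarray(freqs, dtype=float)
    dy = ys - np.round(ys)  # sub-texel offsets, roughly in [-0.5, 0.5]
    dx = xs - np.round(xs)
    offsets = np.stack([dy, dx], axis=-1)[..., None, :]  # (H, W, 1, 2)
    ang = 2 * np.pi * offsets * freqs[:, None]           # (H, W, F, 2)
    feats = np.concatenate([np.sin(ang), np.cos(ang)], axis=-1)  # (H, W, F, 4)
    return feats.reshape(*ys.shape, -1)                  # (H, W, 4F)

def upscale(lr, scale, residual_fn=None, freqs=(1.0, 2.0, 4.0)):
    """Upscale an (H, W, C) frame by an arbitrary factor: bilinear base plus optional residual.

    residual_fn(feats, base) is a hypothetical stand-in for a trained network that
    predicts high-frequency detail; with None, the result is plain bilinear upsampling.
    """
    h_lr, w_lr = lr.shape[:2]
    h_hr, w_hr = int(round(h_lr * scale)), int(round(w_lr * scale))
    ys, xs = hr_to_lr_coords(h_hr, w_hr, h_lr, w_lr)
    base = bilinear_sample(lr, ys, xs)  # low-frequency structure
    if residual_fn is None:
        return base
    feats = fourier_features(ys, xs, freqs)
    return base + residual_fn(feats, base)  # residual adds detail on top of the base
```

Because the output size is derived from `scale`, the same `residual_fn` could be reused at 1.5×, 2×, or any other factor without retraining; only the query coordinates change. Predicting a residual over an interpolated base, rather than raw pixels, is one common way to keep low-frequency content stable.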

Network Architecture

Runtime Comparison